In the latest addition to our data-driven culture series, we take a look at metrics and experimentation. As an accelerator, we come across many start-ups that position themselves as data-driven, but we have realized that there is no common understanding of what that positioning means. There is also a misconception that data is complex, abstract and out of reach. This, however, doesn’t need to be the case.

So what does it mean to have a data-driven culture?

We think there are four core pillars:

i) Define success and what needs to be measured

ii) Have a culture of testing and learning

iii) Take time to design an agile data infrastructure

iv) Ensure everyone in the team owns data-driven processes

Here, we discuss metrics and experimentation, focusing on the first two pillars:

Defining success and what needs to be measured

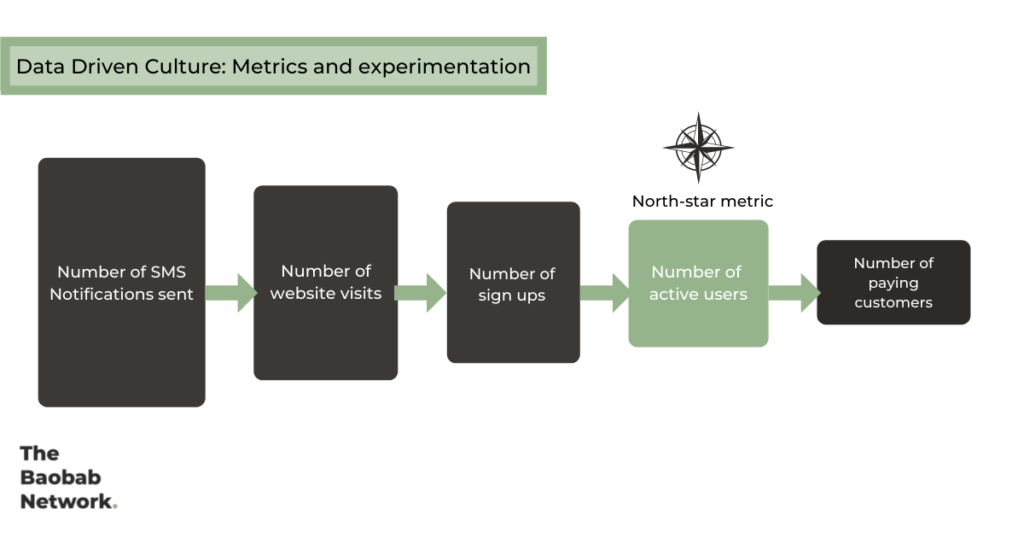

Data-driven start-ups are very intentional about setting the right goals and measuring results. This involves clearly defining what the company values and finding tangible metrics that capture it within a specific time frame. To ensure clarity, simplicity and alignment, a team should pick a single “north-star” metric to measure the start-up’s success. Ideally, your company’s north-star metric is connected to important drivers within the business and is also actionable, i.e. the team has the ability to influence it.

Here is an example of how a newly launched B2C company could go about identifying a good north-star metric.

The goal: Drive customer acquisition

The drivers: The team collected a list of phone numbers from potential customers while conducting consumer research. They plan to start by sending SMS notifications to drive traffic to their website. They expect only a fraction of the people who receive an SMS to visit the website, a smaller fraction to sign up, an even smaller fraction to become active users, and only a few to become paying customers.
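
To make the shrinking fractions concrete, here is a minimal sketch of how a team might compute conversion rates across this funnel. The stage names and all of the counts are hypothetical, invented purely for illustration; they are not figures from a real start-up.

```python
# Hypothetical funnel counts, invented for illustration only.
# Each stage is a subset of the one before it.
funnel = [
    ("sms_sent", 10_000),
    ("visited_site", 1_500),
    ("signed_up", 450),
    ("active_users", 180),
    ("paying_customers", 36),
]


def funnel_rates(stages):
    """Return (stage, count, step_rate, overall_rate) for each stage.

    step_rate    = count / count of the previous stage
    overall_rate = count / count of the first stage
    """
    top = stages[0][1]
    prev = top
    rows = []
    for name, count in stages:
        step_rate = count / prev if prev else 0.0
        overall_rate = count / top if top else 0.0
        rows.append((name, count, step_rate, overall_rate))
        prev = count
    return rows


rows = funnel_rates(funnel)
for name, count, step, overall in rows:
    print(f"{name:<17} {count:>6}  step: {step:6.1%}  overall: {overall:6.1%}")
```

Laying the funnel out this way shows which step loses the most people, which can help the team decide which stage is the most useful candidate for a north-star metric.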